Ever noticed that while training a neural network, the loss stops decreasing and the weights stop updating after a certain point? To understand this hitch, we need to look at how gradient descent minimizes the loss: it adjusts each weight in the direction that lowers the loss.

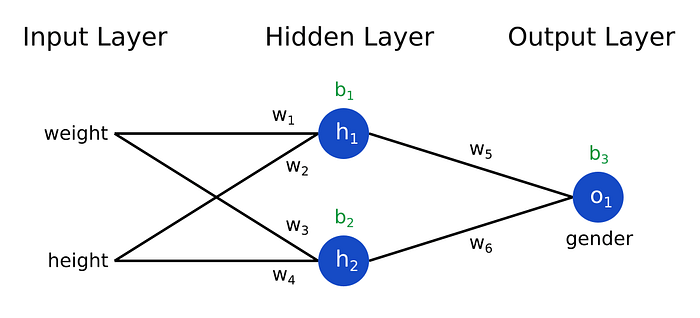

Let’s picture a small neural network with output O1, trained with the aim of minimizing the loss.

To update weights through gradient descent, we need to know how each weight affects the output. During backpropagation, we find that O1 is influenced by w5 and w6, and h1 is influenced by w1 and w2, so w1 also affects O1 indirectly through h1, as a chain reaction.

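Calculating the rate of change of O1 with respect to w1 uses the chain rule and looks something like this:

Rate of change of O1 (wrt w1) = rate of change of O1 (wrt h1) * rate of change of h1 (wrt w1)

The first factor depends on w5, the weight connecting h1 to O1. In math, this looks like:

$$
\frac{\partial O_1}{\partial w_1} = \frac{\partial O_1}{\partial h_1} \cdot \frac{\partial h_1}{\partial w_1}
$$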

So we end up multiplying all these gradients, or derivatives, together. The catch is that h1 might use a sigmoid activation, whose derivative always lies between 0 and 0.25.
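For reference, here is where the 0.25 bound comes from. This is the standard sigmoid derivative; the symbol $\sigma$ is notation introduced here, not something from the diagram above.

$$
\sigma(x) = \frac{1}{1 + e^{-x}}, \qquad \sigma'(x) = \sigma(x)\,\bigl(1 - \sigma(x)\bigr) \le \frac{1}{4}
$$

The maximum is reached at $x = 0$, where $\sigma(0) = \tfrac{1}{2}$ and so $\sigma'(0) = \tfrac{1}{2} \cdot \tfrac{1}{2} = 0.25$. Everywhere else the derivative is smaller.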

As the number of layers increases, more of these small factors get multiplied together during backpropagation, so the derivative becomes vanishingly small and has a negligible effect on the weight w1.
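To see the shrinking effect in numbers, here is a minimal sketch. It assumes a toy network made of a chain of one-neuron sigmoid layers with every weight set to 1.0; the function names are made up for illustration and don't come from any library.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x):
    # Derivative of the sigmoid: s * (1 - s), never larger than 0.25.
    s = sigmoid(x)
    return s * (1.0 - s)


def gradient_through_chain(x, weights):
    """Return d(output)/d(first weight) for a chain of one-neuron
    sigmoid layers, where a_k = sigmoid(w_k * a_{k-1}) and a_0 = x."""
    # Forward pass: remember each layer's input and pre-activation.
    inputs, pre_acts = [], []
    a = x
    for w in weights:
        z = w * a
        inputs.append(a)
        pre_acts.append(z)
        a = sigmoid(z)

    # Backward pass: walk from the last layer down to the second one.
    grad = 1.0
    for k in range(len(weights) - 1, 0, -1):
        # d a_k / d a_{k-1} = sigmoid'(z_k) * w_k
        grad *= sigmoid_grad(pre_acts[k]) * weights[k]

    # First layer: d a_1 / d w_1 = sigmoid'(z_1) * x
    grad *= sigmoid_grad(pre_acts[0]) * inputs[0]
    return grad


if __name__ == "__main__":
    for depth in (1, 5, 10, 20):
        g = gradient_through_chain(x=1.0, weights=[1.0] * depth)
        print(f"depth={depth:2d}  d(output)/d(w1) = {g:.3e}")
```

Every extra layer multiplies the gradient by another factor of at most 0.25, so the printed value drops by orders of magnitude as the depth grows.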

How do we know this is happening?

- The loss barely changes from one epoch to the next.

- Plots of weights against epochs go flat, especially for the layers closest to the input.
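Both checks can be automated. The sketch below is only one possible way to do it: `loss_has_plateaued` and `mean_abs_update` are hypothetical helpers, and the `window` and `tol` defaults are arbitrary values you would tune for your own training run.

```python
def loss_has_plateaued(losses, window=5, tol=1e-4):
    """True if the loss improved by less than `tol` over the last
    `window` epochs. `losses` holds one loss value per epoch."""
    if len(losses) <= window:
        return False  # not enough history yet
    return losses[-window - 1] - losses[-1] < tol


def mean_abs_update(prev_weights, new_weights):
    """Average absolute change in one layer's weights between two epochs.
    Values near zero for early layers hint at vanishing gradients."""
    total = sum(abs(n - p) for p, n in zip(prev_weights, new_weights))
    return total / len(new_weights)


if __name__ == "__main__":
    history = [1.0, 0.5, 0.30001, 0.30001, 0.30001, 0.3, 0.3, 0.3]
    print("plateau:", loss_has_plateaued(history))
    print("update size:", mean_abs_update([0.5, -0.2], [0.5, -0.2]))
```

A flat loss on its own can also mean the network has simply converged, so treat these checks as a symptom to investigate rather than proof.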

Here are some down-to-earth solutions:

- Simplify the Model: Decrease the number of layers. But be careful; this might lose the essence of why you used a neural network in the first place.

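- ReLU Activation Function: ReLU sets negative values to 0 and passes positive values through unchanged, so its gradient is exactly 1 for positive inputs and doesn’t shrink on the way back. Think of it like flipping a switch on or off. Watch out for “Dying ReLU,” though: a neuron that only ever sees negative inputs outputs zero, gets zero gradient, and stops learning. Leaky ReLU can help here.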

- Proper Weight Initialization: Start off with good weight values. It’s like giving your neural network a solid foundation to build upon.

- Batch Normalization: Keep things in check by normalizing each layer’s inputs within a mini-batch. This helps keep gradients stable and reduces the vanishing gradient problem.

- Residual Network (ResNet): Create shortcuts in your neural network to let information flow directly across layers. This helps a lot in dealing with the vanishing gradient problem.
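To make the activation and shortcut ideas from the list concrete, here is a rough sketch. It is a toy setup with loud assumptions: every layer sees the same pre-activation `z`, all weights are 1, and a residual layer computes `y = x + f(x)`. The helper names are invented for illustration.

```python
import math


def sigmoid_grad(z):
    s = 1.0 / (1.0 + math.exp(-z))
    return s * (1.0 - s)


def relu_grad(z):
    # 1 for positive inputs, 0 otherwise (the "switch").
    return 1.0 if z > 0 else 0.0


def leaky_relu_grad(z, slope=0.01):
    # Like ReLU, but negative inputs keep a small non-zero slope.
    return 1.0 if z > 0 else slope


def chained_gradient(local_grad, z, depth, residual=False):
    """Product of per-layer derivatives across `depth` identical layers.
    With residual=True each layer is y = x + f(x), so the per-layer
    derivative becomes 1 + f'(z) because of the shortcut."""
    factor = local_grad(z)
    if residual:
        factor += 1.0
    return factor ** depth


if __name__ == "__main__":
    depth = 20
    print("sigmoid:           ", chained_gradient(sigmoid_grad, 0.5, depth))
    print("ReLU (z > 0):      ", chained_gradient(relu_grad, 0.5, depth))
    print("ReLU (z < 0, dead):", chained_gradient(relu_grad, -0.5, depth))
    print("sigmoid + shortcut:", chained_gradient(sigmoid_grad, 0.5, depth, residual=True))
    # Single-layer view of the dying ReLU fix:
    print("ReLU grad at -0.5: ", relu_grad(-0.5))
    print("leaky grad at -0.5:", leaky_relu_grad(-0.5))
```

In this toy setup the sigmoid product collapses toward zero, ReLU keeps it at 1 while the neuron is active, and the shortcut keeps each factor at 1 or above. Real networks have varying weights and pre-activations, so treat the numbers as intuition only.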

So, if your neural network seems stuck, try these fixes. They’re like giving your network a little nudge, helping it learn more effectively.