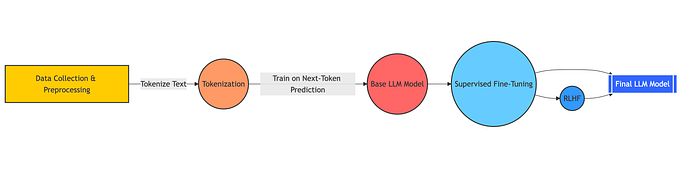

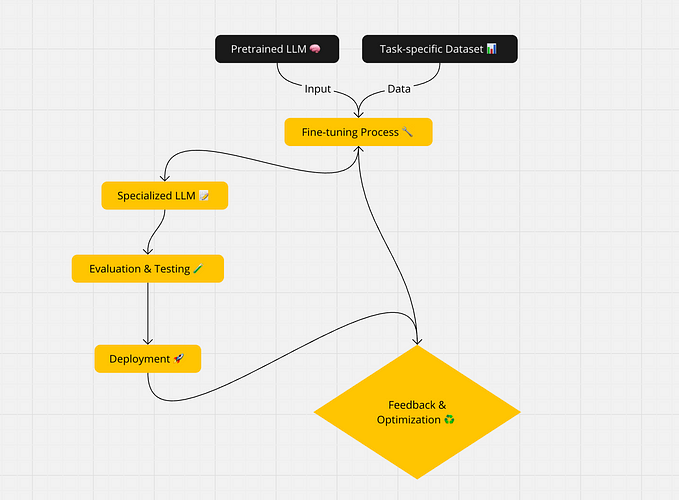

When fine-tuning a Large Language Model (LLM), instead of adjusting all the original weights, we can train a smaller set of new weights. Once trained, we add these new weights to the original ones during inference. This approach is efficient because it reduces the number of weights we need to update, thanks to Low-Rank Adaptation (LoRA).

What is LoRA?

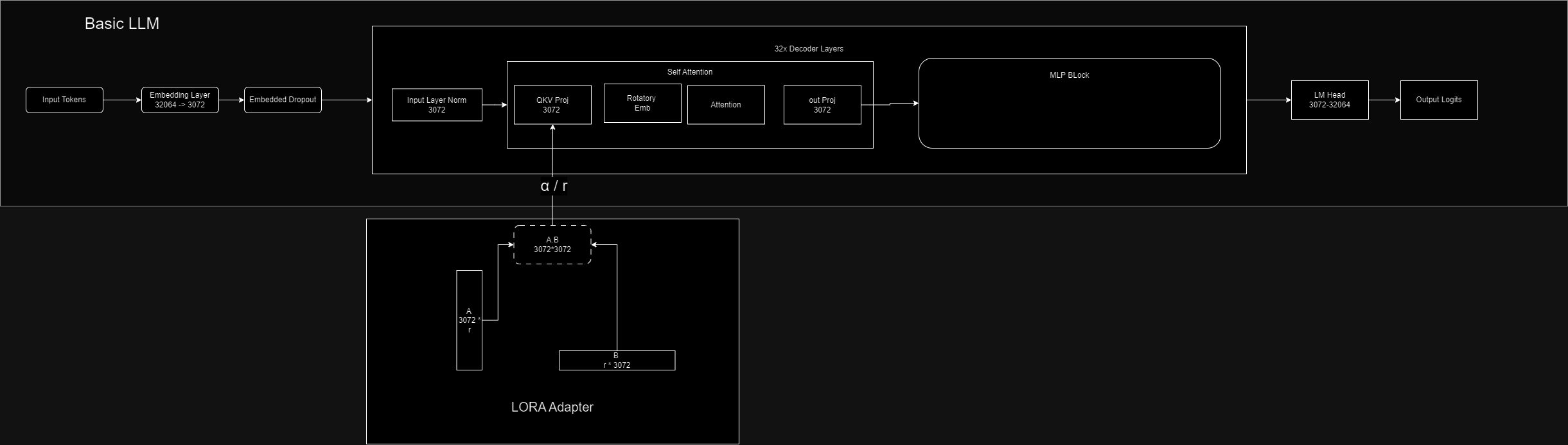

Think of the weight matrix in our base model as W with dimensions n × n. LoRA creates two smaller matrices, A and B, through matrix factorization:

- A has dimensions n × r

- B has dimensions r × n

Multiplying A and B gives us a matrix of the same size as W, but since r is much smaller than n, we’re dealing with fewer parameters. This technique allows us to apply LoRA to different layers, each potentially having its own rank r.

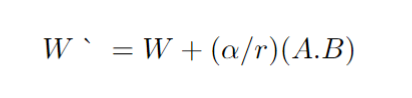

Instead of modifying the entire model, LoRA fine-tunes only the new matrices AAA and BBB, leaving the original weight matrix WWW unchanged to preserve the model’s core knowledge. During training, these smaller matrices are updated for the specific task, and at inference, their contribution is added back to the original weights as:

Here, α is a scaling factor that controls the contribution of the new weights.

Key Concepts and Considerations

1. Choosing the Right Rank (r)

- The rank r determines the size of the low-rank matrices A and B .

- A smaller r means fewer parameters but might limit the expressiveness of the adaptation.

- A larger r increases expressiveness but can lead to higher computational costs.

- Typically, r is chosen empirically based on:

- The complexity of the task.

- The size of the dataset.

- Computational constraints.

2. Scaling Factor (α)

- α adjusts the influence of the new weights A×B.

- Higher α values amplify the adaptation but can risk overriding the base model’s knowledge.

- Lower α values ensure the adaptation is more subtle.

- Setting α appropriately requires experimentation and is often proportional to r.

3. LoRA Has Its Own Dropouts

- LoRA incorporates dropout to prevent overfitting while training the A and B matrices.

- Dropout randomly zeroes out elements in A or B during training, ensuring that the model generalizes well to unseen data.

4. Quantizing the LoRA Model

- Once the LoRA adapters are trained, the entire model (including the LoRA weights) can be quantized.

- Quantization reduces the model’s precision (e.g., to 4-bit or 8-bit weights) to save memory and accelerate inference, making it suitable for deployment on edge devices.

5. Layer-Specific Ranks

- LoRA allows setting different ranks r for different layers in the model.

- For example, higher ranks can be assigned to layers critical for the task, while lower ranks can be used for less important layers.

6. Task-Specific Fine-Tuning

- LoRA excels in tasks requiring adaptation to specific domains (e.g., medical texts or legal documents) or tasks (e.g., summarization, translation).

- By focusing on adapters, the fine-tuning process becomes faster and more efficient compared to traditional methods.

7. Efficiency and Parameter Sharing

- Unlike full fine-tuning, LoRA fine-tunes only the additional matrices (A and B ), leaving the original weights untouched.

- This makes it easy to share or reuse adapters across multiple models and tasks without needing to store multiple copies of the base model.

Key Libraries

To implement LoRA, we’ll use the following libraries:

pip install transformers peft accelerate bitsandbytes- Transformers: Provides pre-trained models, tokenizers, datasets, and pipelines.

- PEFT (Parameter-Efficient Fine-Tuning): Offers methods like LoRA for efficient fine-tuning of LLMs.

- Accelerate: Helps in deploying and training LLMs across different devices (CPU, GPU, TPU).

- Bitsandbytes: Useful for low-bit quantization (e.g., 4-bit, 8-bit), reducing model size.

Implementing LoRA

Here’s how you can set up and apply LoRA to your model:

from transformers import AutoModelForCausalLM, AutoTokenizer

from peft import LoraConfig, get_peft_model

model_name = "microsoft/Phi-3-mini-4k-instruct"

# Load the base model

base_model = AutoModelForCausalLM.from_pretrained(

model_name,

device_map="cuda", # Choose device (CPU or GPU)

load_in_4bit=True, # Quantize to 4-bit for efficiency

trust_remote_code=True,

torch_dtype="auto"

)

# Load the tokenizer

tokenizer = AutoTokenizer.from_pretrained(base_model)

# Define target modules for LoRA

target_modules = [

'model.layers.0.self_attn.o_proj', # Output projection of self-attention

'model.layers.0.self_attn.qkv_proj' # Projections of Q, K, V

]

# Configure LoRA

lora_config = LoraConfig(

r=16, # Rank of LoRA

lora_alpha=32, # Scaling factor

target_modules=target_modules, # Layers to apply LoRA

lora_dropout=0.7, # Dropout rate

bias="none",

task_type="CAUSAL_LM",

)

# Apply LoRA to the model

model = get_peft_model(base_model, lora_config)

# Print the number of trainable parameters

model.print_trainable_parameters()Handling Changes to Output Layers

In some cases, you might need to modify the output layer of your LLM, such as adding new tokens specific to your application. This change affects both the model and the tokenizer.

Here’s how you can add special tokens:

# Define new special tokens

special_tokens_dict = {'additional_special_tokens': ['<instruction>', '<story>']}

# Add special tokens to the tokenizer

num_added_toks = tokenizer.add_special_tokens(special_tokens_dict)

# Resize the model's embeddings to match the tokenizer

model.resize_token_embeddings(len(tokenizer))After training your LoRA model with these new tokens, save your LoRA adapter, tokenizer, and other necessary files.

Loading the Model for Inference

When loading the model for inference, it’s crucial to ensure compatibility between the base model and the LoRA weights. Here’s the correct order:

- Load the saved tokenizer.

- Load the base model.

- Resize the model’s token embeddings to match the tokenizer.

- Load the LoRA weights onto the base model.

from transformers import AutoModelForCausalLM, AutoTokenizer

from peft import PeftModel

# Load the tokenizer

tokenizer = AutoTokenizer.from_pretrained("path_to_saved_tokenizer")

# Load the base model

model = AutoModelForCausalLM.from_pretrained("path_to_base_model")

# Resize token embeddings

model.resize_token_embeddings(len(tokenizer))

# Load LoRA weights

model = PeftModel.from_pretrained(model, "path_to_lora_weights")By following this order, you ensure that the base model is compatible with the LoRA weights, preventing any size or dimension mismatches.