Ever wondered how data gets its makeover before revealing its insights? Enter the battleground of data refinement, where normalization and standardization go head-to-head. Think of it as a compelling tale of two methods, each with its unique charm.

While many may glance over these techniques, dismissing them as ‘easy,’ the real challenge lies in grasping the essence of their impact. Yes, the process may seem straightforward, but unlocking the true potential requires a deeper understanding.

No worries, we’re skipping the mind-numbing formulas, so no need to flash back to your stats class trauma! Let’s get into the real talk: what the heck does normalization actually do for our data?

Normalization:

It's like giving each feature a fair shot in the modelling game. Instead of letting larger values dominate, it rescales every feature to a 0 to 1 range. Picture two points on a 2D plane of distance covered and calories burned, before normalization: (3.8, 1300) and (3.9, 800). Now, imagine you’re using the k-nearest neighbours (KNN) algorithm, which typically relies on Euclidean distance to measure the proximity between data points. Euclidean distance is sensitive to differences in scale, meaning features with larger numerical ranges can disproportionately influence the distance calculation.

In our example, without normalization, the Euclidean distance is dominated almost entirely by the “calories burned” feature: the two points differ by 500 in calories but only by 0.1 in distance. This could lead to biased predictions, as the algorithm would favour points that are close in the “calories burned” dimension and overlook potentially relevant patterns in the “distance covered” feature. Normalizing rescales every feature to the range 0 to 1, so no feature holds disproportionate influence over the others and comparisons become fair.

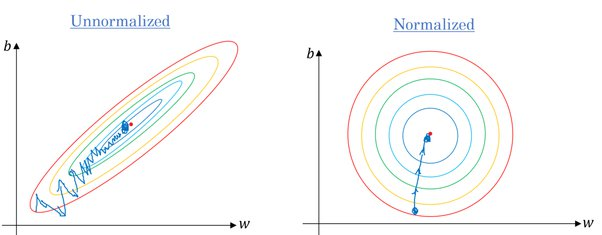
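To make the KNN point concrete, here is a minimal sketch using NumPy. Rows A and B are the two points from the example; rows C, D and E are made up purely so that each feature has a range to scale over, and the helper names `min_max_scale` and `nearest_to_first` are my own, not from any library.

```python
import numpy as np

# Columns: distance covered, calories burned.
# A and B come from the example above; C, D and E are hypothetical rows.
X = np.array([
    [3.8, 1300.0],  # A
    [3.9, 800.0],   # B
    [9.0, 1250.0],  # C (hypothetical)
    [1.0, 300.0],   # D (hypothetical)
    [7.5, 1900.0],  # E (hypothetical)
])
labels = ["A", "B", "C", "D", "E"]


def min_max_scale(data):
    """Rescale each column to the 0 to 1 range."""
    lo = data.min(axis=0)
    hi = data.max(axis=0)
    return (data - lo) / (hi - lo)


def nearest_to_first(data):
    """Label of the row closest to row 0 (point A) by Euclidean distance."""
    dists = np.linalg.norm(data[1:] - data[0], axis=1)
    return labels[1 + int(np.argmin(dists))]


raw_nearest = nearest_to_first(X)
scaled_nearest = nearest_to_first(min_max_scale(X))

print("Nearest to A on raw data:   ", raw_nearest)
print("Nearest to A on scaled data:", scaled_nearest)
```

With these particular rows, the raw data picks C as A's nearest neighbour because C is close in calories, even though it is more than 5 units away in distance. After scaling, B becomes the nearest neighbour, since both features now count on the same footing.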

Unnormalized data also affects models trained with gradient descent when features sit on different scales. The gradient is much steeper along the weights of large-scale features, so the learning rate has to be kept small to avoid overshooting and oscillating in those directions. With such a small learning rate, the weights of small-scale features update very slowly, so overall convergence is prolonged and training may stop at a suboptimal solution.
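Here is a rough sketch of that effect on a tiny linear regression, again with NumPy. Everything in it is an assumption for illustration: the data is synthetic, the helper `final_mse` is hypothetical, and the step size rule (one over the largest eigenvalue of the loss curvature) is just one simple way to pick a learning rate that is safe for each run.

```python
import numpy as np

# Synthetic data: two features on very different scales.
rng = np.random.default_rng(0)
n = 200
distance = rng.uniform(0.0, 10.0, n)      # small-scale feature
calories = rng.uniform(300.0, 2000.0, n)  # large-scale feature
y = 2.0 * distance + 0.01 * calories + rng.normal(0.0, 0.1, n)


def min_max_scale(col):
    """Rescale one feature to the 0 to 1 range."""
    return (col - col.min()) / (col.max() - col.min())


def final_mse(features, targets, steps=500):
    """Run plain gradient descent on mean squared error, return the final error."""
    X = np.column_stack([np.ones(len(targets)), *features])  # add a bias column
    hessian = 2.0 * X.T @ X / len(targets)
    # Largest step that is guaranteed not to overshoot for this data.
    lr = 1.0 / np.linalg.eigvalsh(hessian).max()
    w = np.zeros(X.shape[1])
    for _ in range(steps):
        grad = 2.0 * X.T @ (X @ w - targets) / len(targets)
        w -= lr * grad
    return float(np.mean((X @ w - targets) ** 2))


raw_mse = final_mse([distance, calories], y)
scaled_mse = final_mse([min_max_scale(distance), min_max_scale(calories)], y)

print("MSE after 500 steps, raw features:   ", raw_mse)
print("MSE after 500 steps, scaled features:", scaled_mse)
```

Both runs get the same number of steps and a step size that is safe for their own data. On the raw features, the large calories values force a tiny learning rate, so the distance and bias weights barely move and the error stays high. On the scaled features, the same budget of steps should bring the error down close to the noise level.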

So when should you use normalization?

- When your models depend on distance metrics (KNN or K-means clustering)

- When your algorithm is trained with gradient-based optimization

- Neural networks, etc.

- When dealing with changing data. It ensures that features are consistently scaled to a standard range, which is particularly advantageous in dynamic datasets where the scale of features may vary over time.
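For the changing-data case, one common way to keep the scaling consistent is to learn the minimum and maximum once and reuse them on every new batch. The sketch below assumes that approach; the helpers `fit_min_max` and `apply_min_max` and all the numbers are made up for illustration.

```python
import numpy as np


def fit_min_max(train):
    """Learn the per-feature minimum and maximum from the training data."""
    train = np.asarray(train, dtype=float)
    return train.min(axis=0), train.max(axis=0)


def apply_min_max(data, lo, hi):
    """Scale data with previously learned minimum and maximum values."""
    return (np.asarray(data, dtype=float) - lo) / (hi - lo)


# Hypothetical rows: distance covered, calories burned.
train = np.array([[1.0, 300.0], [3.8, 1300.0], [7.5, 1900.0]])
new_batch = np.array([[3.9, 800.0], [9.0, 2100.0]])

lo, hi = fit_min_max(train)
train_scaled = apply_min_max(train, lo, hi)
new_scaled = apply_min_max(new_batch, lo, hi)

print(train_scaled)
print(new_scaled)
```

Note that new values outside the original range land outside 0 to 1 (the second new row here does), so if the data drifts a lot you may want to refit the minimum and maximum from time to time.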

Some points to consider before normalizing your data:

- Normalization is sensitive to outliers. Because the scaling is defined by the minimum and maximum values, a single extreme value stretches the range and squeezes the majority of data points into a narrow band, distorting how they are represented.

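- If your data exhibits non-Gaussian characteristics, scaling methods that assume normality may not provide optimal results. Min-max scaling to the 0 to 1 range makes no such assumption, so check what the method you pick expects of your data. (I'll try to write more on this point in my upcoming posts.)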
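The outlier point is easy to see with a few numbers. This is a small sketch with made-up calorie values, where `min_max_scale` is my own helper rather than a library function.

```python
import numpy as np


def min_max_scale(values):
    """Rescale values to the 0 to 1 range."""
    values = np.asarray(values, dtype=float)
    return (values - values.min()) / (values.max() - values.min())


calories = [800, 900, 1000, 1100, 1300]  # made-up typical readings
with_outlier = calories + [10000]        # same readings plus one extreme value

typical_scaled = min_max_scale(calories)
outlier_scaled = min_max_scale(with_outlier)

print(typical_scaled)
print(outlier_scaled)
```

Without the outlier, the five readings spread across the whole 0 to 1 range. With it, the outlier takes the value 1 and the five typical readings are all squeezed into a small sliver near 0, which makes them hard to tell apart.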

Thanks for reading!

(I will be covering standardization in a separate story.)